How to set up separately-encrypted dual-booting Linux systems on hybrid storage

It’s time to rebuild my computer setup. This series of blog posts documents how I did it, mainly for my own reference in case I need to rebuild anything in the future, but hopefully it helps someone else out there with similar needs.

Some context

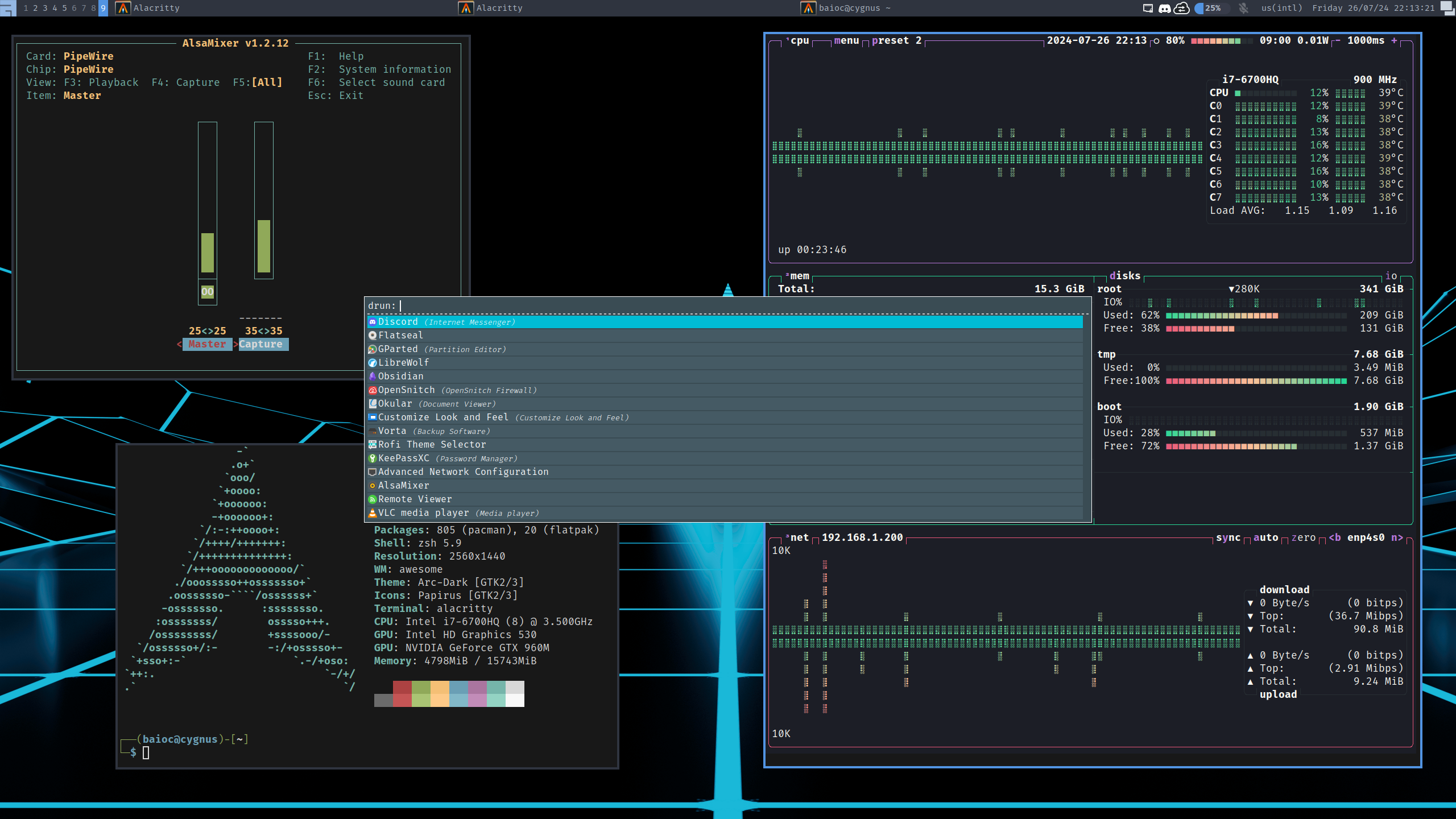

I’ve been using the same Dell laptop for the past ~7 years and have gone through:

- Multiple update-revert cycles on top of the original Windows-only setup

- Resizing Windows partitions in order to dual-boot Manjaro Linux

- Upgrades in physical memory, a battery swap and a new SSD

- Deleting Manjaro from the HDD while distro-hopping to System76’s Pop!_OS Linux

- Corrupting data in the older HDD because of a power loss while moving partitions

- Restoring said data (phew!) from my Borg backups

- Fully abandoning Windows, yet keeping it installed in the HDD “just in case”

Since my new job requires me to use a specific OS, I figured that this would be a good opportunity to start from scratch in order to make better use of the hardware I have. I’ll keep using the same laptop, of course, since it still works well and is powerful enough for all my needs (including gaming from time to time).

My hardware specs are:

- A Dell motherboard/firmware with support for UEFI boot

- An Intel x86-64 processor with integrated graphics

- A discrete Nvidia GPU with 4 GiB VRAM

- 16 GiB DDR3 physical memory

- An old 5400 RPM HDD with ~1 TB capacity

- A newer M.2 2280 SSD with ~480 GB capacity

What I need/want from the resulting system is:

- A standard Ubuntu 24.04 LTS install (+encryption), for work

- A fully-custom Arch Linux system, for personal use

- Be able to quickly (re)boot and choose an(other) OS for the occasion

- Security for both personal and work-related data

- Make good use of my hardware, especially the SSD and GPU

- Get the most out of my laptop’s battery life, when unplugged

Additionally:

- My HDD is pretty old at this point (in use for 7+ years!), so I have to assume that it may start failing at any moment.

- On the other hand, I’ve heard some horror stories about SSD sudden death, so I want to be prepared for failures there as well.

- I’m not worried about customizing my work OS that much, so a fresh reinstall shouldn’t be much of an issue.

- Furthermore, I expect work-related storage to be mostly build artifacts and caches, which can always be recovered through a clean build from the (version-controlled, cloud-backed) sources.

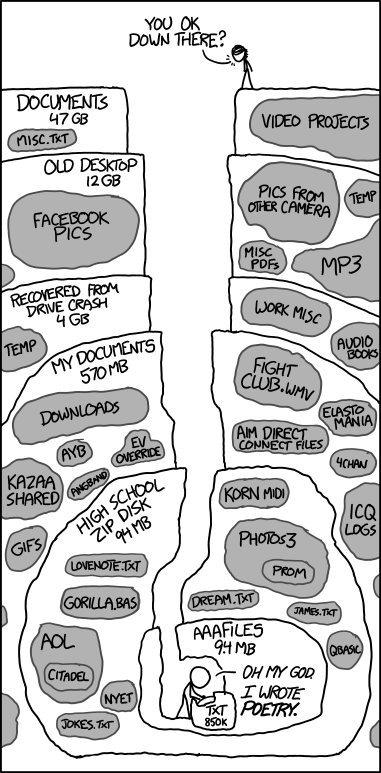

- I probably don’t need more than 400 GiB for my personal data + OS, at least based on usage in my current setup.

A first sketch

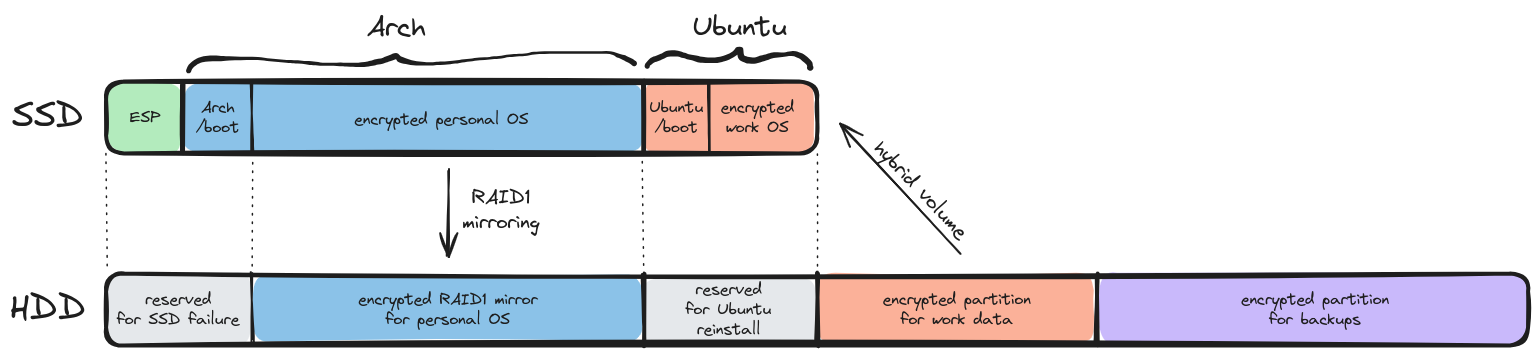

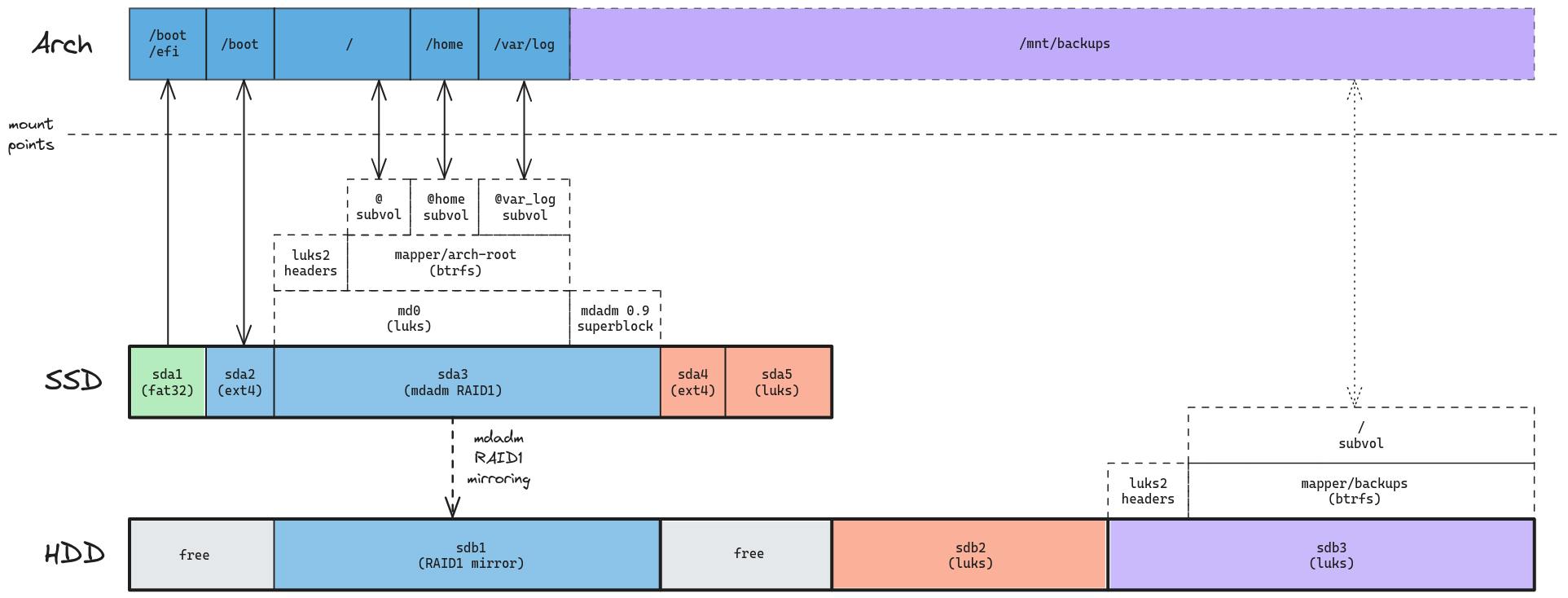

After doing some research and a few VM tests, here’s what I want the system to look like, at a high level:

A few things to note:

- There’s a single ESP partition on the system.

  - According to this, the UEFI specification does not require support for multiple ESPs.

  - This partition has to be formatted as FAT32.

- Each OS gets its own unencrypted `/boot` partition.

  - They are unencrypted because most bootloaders don’t support encryption. GRUB is a bit of an exception, but it still doesn’t support default LUKS2 encryption.

  - Each OS gets to manage its own boot partition, reducing the chances that they’ll mess up each other’s boot.

  - Both boot partitions will be formatted using ext4. This is how Ubuntu’s 24.04 installer does it, and how I chose to do it (as opposed to using ext2) after reading this.

- Personal stuff, work stuff and backups are all separately encrypted.

  - This is for added security: a compromised user/service in the work OS won’t be able to steal my personal data and vice-versa.

- Most of the SSD is filled with personal stuff.

  - This is needed in order for me to fit everything I have in the SSD. I don’t want to lose my files if/when the HDD fails.

  - Even if the SSD fails, the RAID1 mirror ensures that I’ll be able to reboot and continue working on my own personal stuff on short notice (NOTE: this does NOT substitute for external backups).

  - If that happens, it should be pretty straightforward to disable RAID1.

  - Booting from the HDD mirror in case of an SSD failure will require me to have (a) ESP and `/boot` backups in the last HDD partition and (b) a live ISO which I can boot from and restore those partitions.

- The work installation will be split between SSD and HDD.

  - This is because most of the SSD storage is taken by personal stuff.

  - If the HDD fails, I’ll lose some build artifacts / caches but will still be able to keep working in the storage-constrained environment of the SSD.

  - If the SSD fails, I can just reinstall the OS from scratch in the reserved HDD space.

- Lack of any swap partitions.

  - On a fully-encrypted system, it seems that swap files will be easier to manage than swap partitions.

Btrfs vs LVM-ext4

LUKS appears to be the current standard for disk encryption on Linux, and it is built on top of the kernel’s dm-crypt. It works by creating a “virtual” block device over an existing one and transparently translating reads and writes to read+decrypt and encrypt+write operations.

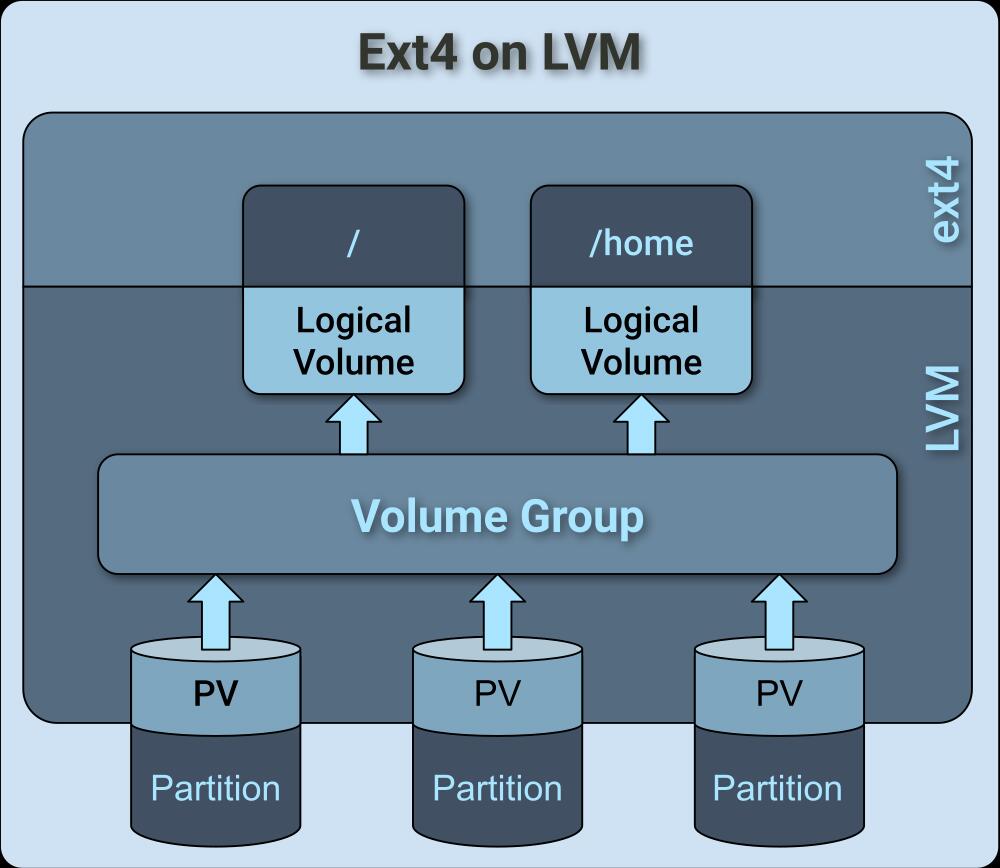
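To make the “virtual block device” idea more concrete, here is a toy sketch of that layering. Everything in it is hypothetical (`RamBlockDevice`, `ToyEncryptedDevice`, the 512-byte sector size), and the “cipher” is a throwaway XOR keystream that is NOT secure; real dm-crypt uses proper ciphers such as AES-XTS. It only shows how reads become read+decrypt and writes become encrypt+write.

```python
import hashlib


class RamBlockDevice:
    """Stand-in for a physical partition: fixed-size sectors held in memory."""

    SECTOR_SIZE = 512  # assumed sector size, for illustration only

    def __init__(self, num_sectors: int) -> None:
        self._sectors = [bytes(self.SECTOR_SIZE) for _ in range(num_sectors)]

    def read_sector(self, index: int) -> bytes:
        return self._sectors[index]

    def write_sector(self, index: int, data: bytes) -> None:
        if len(data) != self.SECTOR_SIZE:
            raise ValueError("data must be exactly one sector long")
        self._sectors[index] = bytes(data)


class ToyEncryptedDevice:
    """Same read/write interface, but only ciphertext reaches the backing device.

    TOY CIPHER (not secure): XOR with a SHA-256-derived per-sector keystream.
    """

    def __init__(self, backing: RamBlockDevice, key: bytes) -> None:
        self._backing = backing
        self._key = key

    def _keystream(self, index: int) -> bytes:
        # Mix the sector number in, so identical plaintext sectors differ on disk.
        stream = b""
        counter = 0
        while len(stream) < self._backing.SECTOR_SIZE:
            stream += hashlib.sha256(
                self._key + index.to_bytes(8, "little") + counter.to_bytes(4, "little")
            ).digest()
            counter += 1
        return stream[: self._backing.SECTOR_SIZE]

    def _xor(self, data: bytes, index: int) -> bytes:
        return bytes(a ^ b for a, b in zip(data, self._keystream(index)))

    def read_sector(self, index: int) -> bytes:
        # read + decrypt
        return self._xor(self._backing.read_sector(index), index)

    def write_sector(self, index: int, data: bytes) -> None:
        # encrypt + write
        if len(data) != self._backing.SECTOR_SIZE:
            raise ValueError("data must be exactly one sector long")
        self._backing.write_sector(index, self._xor(data, index))


disk = RamBlockDevice(num_sectors=4)
unlocked = ToyEncryptedDevice(disk, key=b"not-a-real-key")
unlocked.write_sector(1, b"A" * 512)
print(unlocked.read_sector(1) == b"A" * 512)  # the upper layer sees plaintext
print(disk.read_sector(1) == b"A" * 512)      # the "disk" holds something else
```

Whatever sits above the unlocked device (LVM, Btrfs, ext4) only ever talks to the plaintext interface, which is why the filesystem doesn’t need to know about encryption at all.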

By default, each encrypted device must be unlocked separately, which can be annoying for multi-partition systems. Therefore, most people recommend setting up a flexible filesystem on top of LUKS in order to have a single unlock for all logical partitions needed by the system. Ubuntu’s 24.04 installer uses ext4 partitions on top of LVM on top of LUKS, whereas my current Pop!_OS system was set up with Btrfs on top of LVM on top of LUKS.

While LVM and Btrfs are not mutually exclusive, they have similar features:

- Volume management: both can distribute storage across multiple devices, but in LVM you have to manually resize logical volumes.

- Snapshots: both can create (sub)volume snapshots for backups, but I think Btrfs’s are faster because of CoW.

- RAID: both provide support for RAID, but Btrfs’ is less mature.

- Compression: Btrfs has transparent compression and deduplication.

- Caching: Btrfs does not have an equivalent of LVM’s `lvmcache` for hybrid storage, unless you count hybrid RAID1 (but that requires equal-sized block devices).

While I’ll keep the default LUKS -> LVM -> ext4 used in Ubuntu 24.04, I’ll set up Btrfs on Arch because:

- I don’t want to explicitly resize subvolumes.

- Arch is a rolling release distro, so I’ll want fast snapshots on every update.

- I want a RAID1 solution that works under LUKS (such as `mdadm`), not on top of it, so I wouldn’t be using LVM or Btrfs for their RAID features anyway.

- Btrfs’s compression and deduplication should help reduce SSD disk usage.

- I don’t need a hybrid storage cache for the Arch system, since it will fit entirely in the SSD.

Since I’ll have my personal stuff on top of Btrfs, I might as well use it in the HDD backup partition. That will allow me to use Btrfs’s send/receive operations in order to easily move local snapshots to the HDD, and then later from the HDD to an external backup device.

With that in mind, here’s what I expect my personal OS to look like after booting:

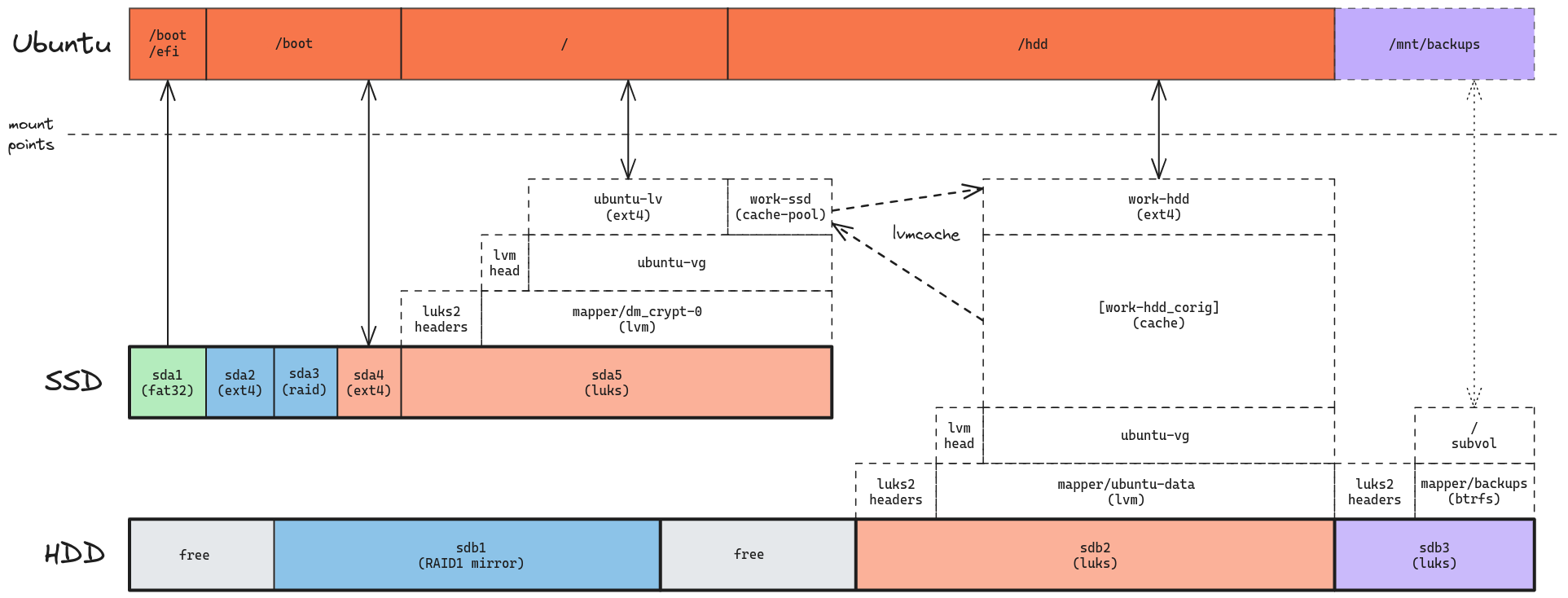

lvmcache vs bcache

I’m going to reserve 100 GiB in the SSD for my work system. Depending on the development tools I use, that won’t be enough storage, in which case I’ll need to store data in the (5x slower) HDD as well.
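Since drive vendors quote capacity in GB (powers of ten) while partitions are sized here in GiB (powers of two), a quick conversion helps when budgeting the SSD. The sketch below is only back-of-the-envelope arithmetic: the 480 GB and 100 GiB figures come from this post, but the 3 GiB set aside for the ESP and boot partitions is a made-up placeholder, since those sizes aren’t stated here.

```python
GB = 10**9   # decimal gigabyte, as printed on the drive label
GIB = 2**30  # binary gibibyte, as used for partition sizes


def gb_to_gib(gigabytes: float) -> float:
    """Convert a capacity in GB to GiB."""
    return gigabytes * GB / GIB


def remaining_gib(capacity_gb: float, reserved_gib: list[float]) -> float:
    """Space left on a drive after subtracting the reserved partitions."""
    return gb_to_gib(capacity_gb) - sum(reserved_gib)


SSD_CAPACITY_GB = 480     # "~480 GB" from the hardware list
WORK_RESERVED_GIB = 100   # reserved for the work system
BOOT_OVERHEAD_GIB = 3     # ASSUMPTION: ESP + boot partitions, size not given in the post

ssd_gib = gb_to_gib(SSD_CAPACITY_GB)
left = remaining_gib(SSD_CAPACITY_GB, [WORK_RESERVED_GIB, BOOT_OVERHEAD_GIB])

print(f"SSD capacity: {ssd_gib:.1f} GiB")
print(f"Left for everything else: {left:.1f} GiB")
```

With these assumed numbers, the SSD comes out at roughly 447 GiB, and about 344 GiB remains once the work reservation and the placeholder boot overhead are subtracted.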

Setups like this are pretty common nowadays: just keep your base OS installed in the SSD, while large(r) files go in the slower HDD. Since frequent access to large files could also benefit from SSD speedups, some people will go the extra mile and move hot data to the SSD, then place it back on the HDD when it’s no longer being used that often.

The thing is: I don’t want to do that kind of optimization manually, transferring files back and forth between disks. This is precisely why I’m interested in setting up a hybrid storage solution, in which the SSD acts as a cache to the HDD.
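As a rough intuition for what an SSD cache could buy, here is a small sketch that estimates the average read rate as a function of the cache hit ratio. It is a simplification under stated assumptions: it reuses the sequential read rates from Appendix A, treats every read as either a pure SSD hit or a pure HDD miss, and ignores writes, promotion costs and random-access latency. The function name `effective_read_rate` is made up for this example.

```python
SSD_READ_MBPS = 540.8  # average SSD read rate from Appendix A
HDD_READ_MBPS = 112.1  # average HDD read rate from Appendix A


def effective_read_rate(hit_ratio: float,
                        fast_mbps: float = SSD_READ_MBPS,
                        slow_mbps: float = HDD_READ_MBPS) -> float:
    """Average MB/s when a fraction `hit_ratio` of the data is served by the cache.

    Time per MB is the weighted sum of the per-device times, so the rates
    combine harmonically rather than linearly.
    """
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    seconds_per_mb = hit_ratio / fast_mbps + (1.0 - hit_ratio) / slow_mbps
    return 1.0 / seconds_per_mb


for ratio in (0.0, 0.5, 0.9, 1.0):
    print(f"hit ratio {ratio:.0%}: ~{effective_read_rate(ratio):.0f} MB/s")
```

Because the slow device dominates the total time, the benefit is far from linear: in this model a 50% hit ratio lands much closer to HDD speed than to SSD speed, so a cache only pays off when the hot data really does fit in it.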

It seems that the standard options for doing this on Linux are lvmcache and bcache. Here are a few points I gathered while researching those technologies:

- lvmcache runs on top of LVM, which I’ll already be using in the Ubuntu install.

- LVM snapshots are not possible on a cached volume; that’s OK for me but could be a deal breaker for others.

- In an encrypted system, bcache should sit between LUKS and the file system, and I don’t see how to do that without deviating from the standard Ubuntu 24.04 encrypted install.

- A few comments on Reddit and a specific blog post convinced me that bcache had many issues back in 2020-2021; I’m not sure if those have been fixed since then.

- On the other hand, I see people happily using lvmcache in 2022.

In case it wasn’t obvious, I’ll be going with lvmcache for my work OS:

Next steps

Of course, the next step is actually implementing these designs.

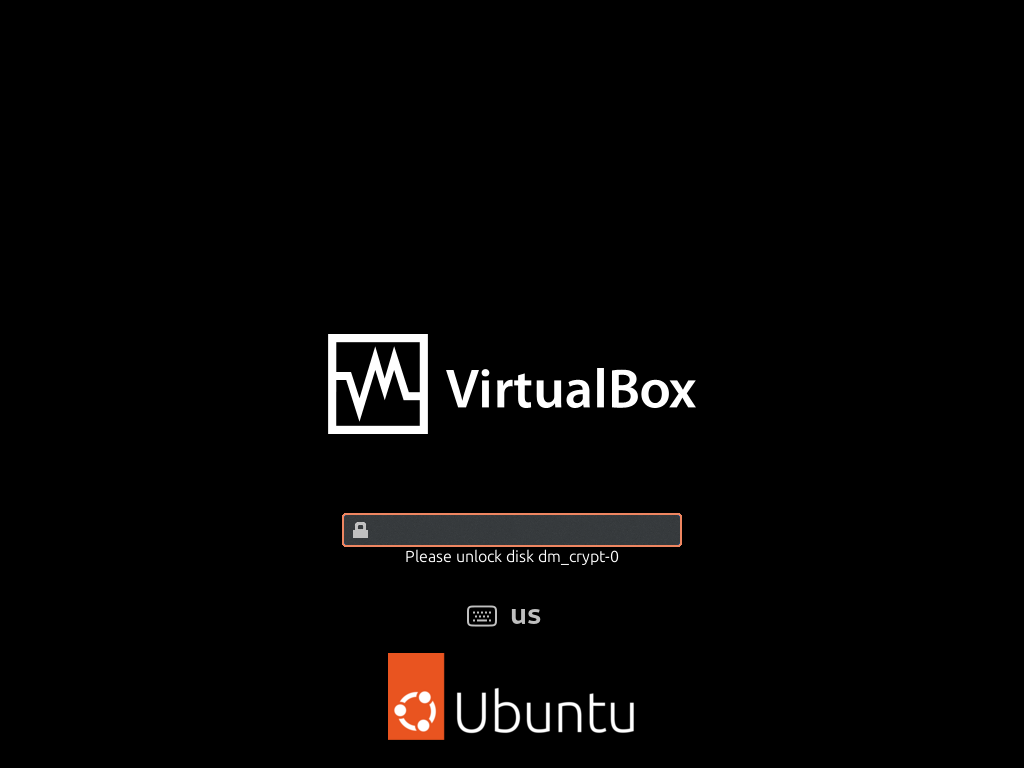

I’ll set up everything in a VM first and document how I did it in the next few blog posts. Then, if everything works, I’ll apply it to my actual machine.

See you in the next part!

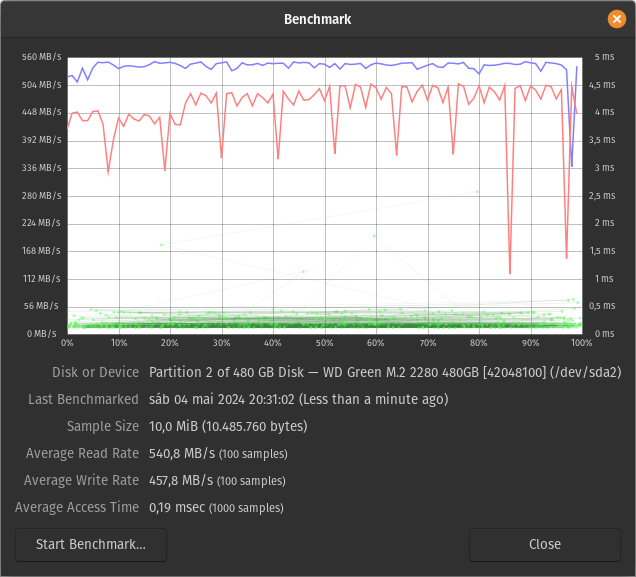

Appendix A - SSD vs HDD speeds

I wanted to understand just how much faster my M.2 2280 SSD is than my old 5400 RPM HDD, so I ran a few tests using hdparm and gnome-disk-utility.

I started by carving out 2 GiB partitions from the free space on each drive:

- `/dev/sda2` is on the SSD

- `/dev/sdb7` is on the HDD

Running the default GNOME Disks R/W benchmark on the unformatted partitions yields the following results (read rates can be confirmed by a few runs of hdparm -t):

| Device | Average Read Rate | Average Write Rate |
|---|---|---|
| SSD | 540.8 MB/s | 457.8 MB/s |
| HDD | 112.1 MB/s | 93.2 MB/s |
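For reference, the ratios behind the “5x slower” figure can be worked out directly from the table. This is just arithmetic on the numbers above; the `benchmarks` dictionary and `speedup` helper are names invented for the example.

```python
# Average rates in MB/s, copied from the table above.
benchmarks = {
    "SSD": {"read": 540.8, "write": 457.8},
    "HDD": {"read": 112.1, "write": 93.2},
}


def speedup(metric: str) -> float:
    """How many times faster the SSD is than the HDD for the given metric."""
    return benchmarks["SSD"][metric] / benchmarks["HDD"][metric]


for metric in ("read", "write"):
    print(f"{metric}: SSD is ~{speedup(metric):.1f}x faster than the HDD")
```

Both ratios come out a little under 5, for reads and for writes alike.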

Appendix B - mdadm vs Btrfs for hybrid (SSD+HDD) RAID1

While mdadm is the default option for software RAID on Linux, I found out that Btrfs supports RAID1 natively. Therefore, I could choose between the following stacks for my personal OS:

    mdadm RAID1 -> LUKS -> Btrfs     |     LUKS -> Btrfs with RAID1
                                     |
    SSD: [ mdadm | LUKS | Btrfs ]    |     SSD: [ LUKS | Btrfs ]
             |                       |                     |
             | RAID1 mirroring       |                     | RAID1 mirroring
             v                       |                     v
    HDD: [ mdadm | LUKS | Btrfs ]    |     HDD: [ LUKS | Btrfs ]

If I wasn’t using disk encryption, I might have gone with the simpler Btrfs-only stack. However, since Btrfs sits on top of LUKS, RAID1 would only kick in after both partitions were decrypted.

Another issue is performance on a hybrid storage setup: according to this answer, “the btrfs raid 1 disk access algorithms work by reading from one disk for even-numbered PIDs, and the other for odd-numbered PIDs”.

In other words: disk access speed will suck for half of your processes.

Online discussions pointed me to the source code, which does appear to be distributing reads based on PIDs as per a BTRFS_READ_POLICY_PID.
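To see why that matters on a hybrid setup, here is a minimal simulation of such a policy. It assumes the selection boils down to “PID modulo number of mirrors”, as the quoted answer describes; the function names and the choice of which device gets the even PIDs are arbitrary and only for illustration.

```python
from collections import Counter

MIRRORS = ("SSD", "HDD")  # ASSUMPTION: index 0 is the SSD copy, index 1 the HDD copy


def pick_mirror(pid: int, num_mirrors: int = len(MIRRORS)) -> int:
    """PID-based read policy: the reading process's PID selects the mirror."""
    return pid % num_mirrors


def device_for(pid: int) -> str:
    """Name of the device a process with this PID would read from."""
    return MIRRORS[pick_mirror(pid)]


# Ten hypothetical processes with consecutive PIDs.
pids = range(1000, 1010)
counts = Counter(device_for(pid) for pid in pids)

for pid in pids:
    print(f"PID {pid} reads from the {device_for(pid)}")
print(dict(counts))
```

The policy never looks at device speed: a process either gets lucky with its PID or it reads from the HDD for its whole lifetime, even though a faster copy of the same data sits right next to it.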

In conclusion: I’ll forget about Btrfs RAID1 for now. Maybe in the future, when it provides a better RAID1 access distribution policy (and maybe native encryption, I’ve heard that’s on the roadmap), I’ll consider it again for a setup like mine.